B-Trust

To make use of any of the invocations contained within this information, as well as the provided APIs, the use of a ProjectName, UrlBase, and an ApiKey requested beforehand is required.

For any inquiries or technical assistance required, the email channel for correspondence is soporte@ado-tech.com

- Welcome to B-Trust Documentation

- SDKS

- Android SDK Guide

- iOS SDK Guide

- JavaScript SDK Guide

- Liveness API Documentation

- SDK response update 3.0

- COMPLETE EXPERIENCIE SOLUTION

- Web Integration

- Classic Flow

- KYC Ecuador Flow

- KYC Ecuador + Document Capture Flow

- KYC Ecuador StartCompareFaces

- KYC Service Overview and Integration

- KYC Transaction Flow

- Single-use link

- API REFERENCE - CONFIGS

- API REFERENCE - EVENT TRACKER

- SIGNING DOCUMENTS

- Catalogs

- API REFERENCE - PROFILE

- API REFERENCE - PUSHDATA

- SDK Integration Full Flow

- ADO's Voice Screen

- ADO's Voice Screen

- Introduction to the Emotion Logic AI Platform

- About Layered Voice Analysis (LVA™)

- Emotion Logic platform's basics

- FeelGPT Advisors System

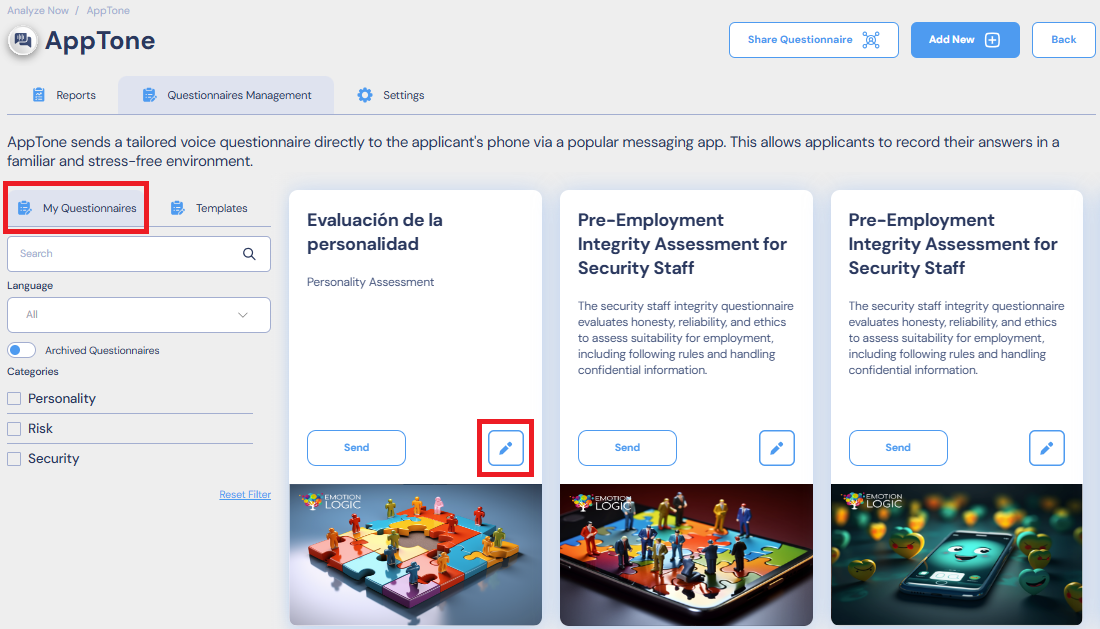

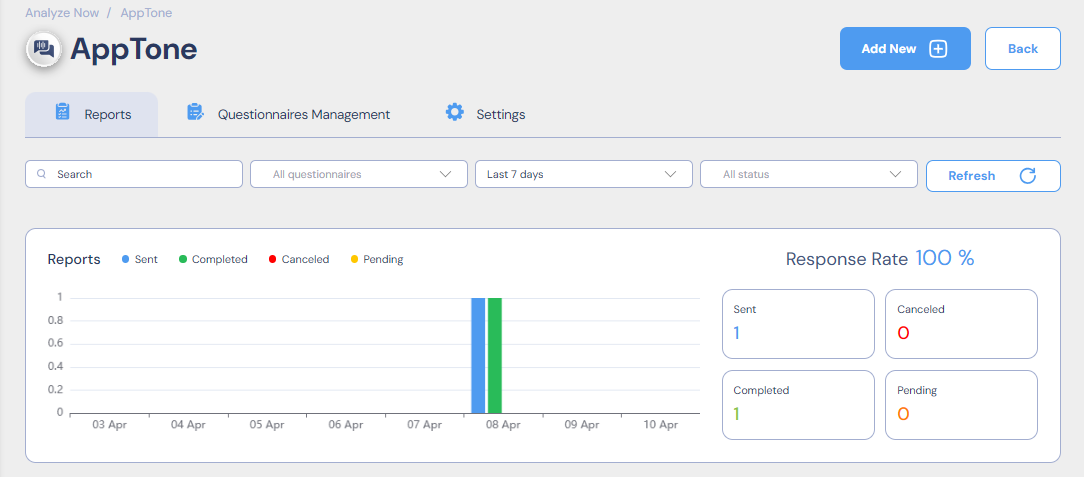

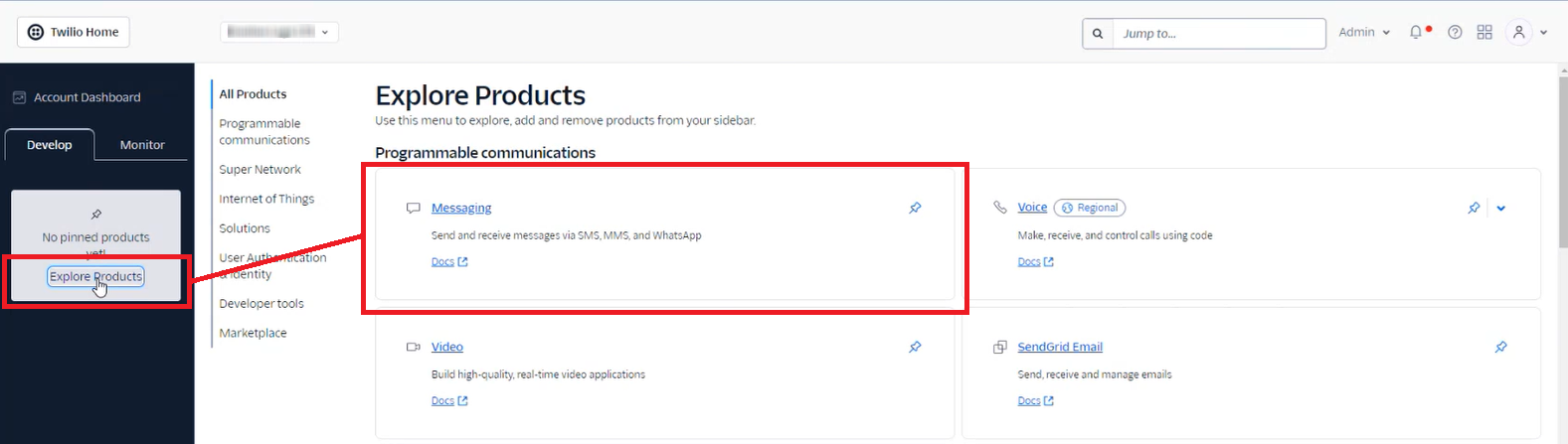

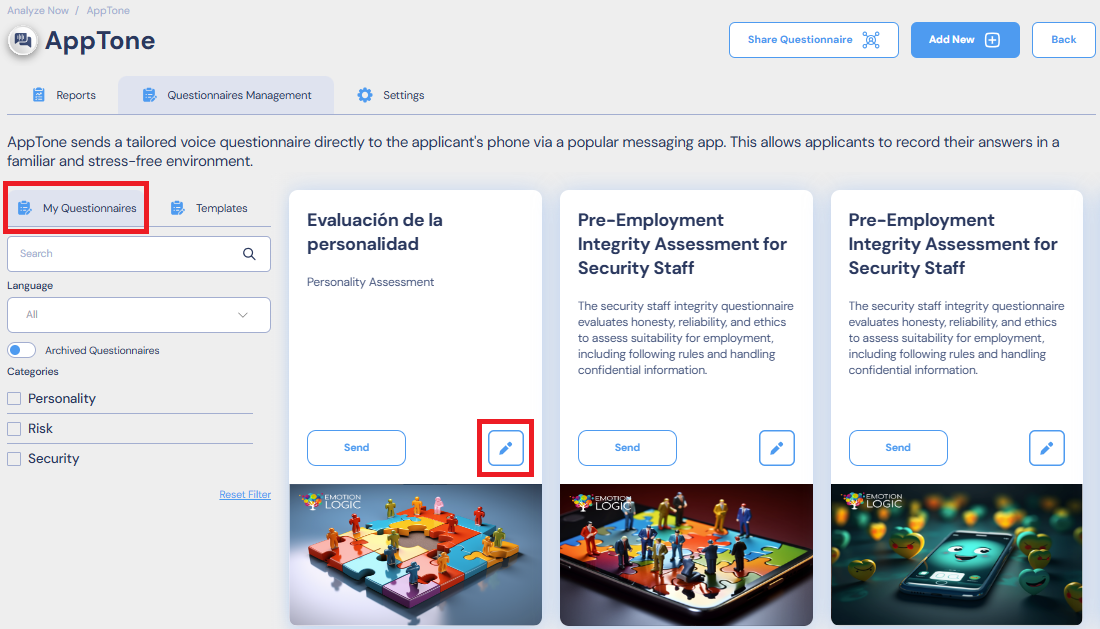

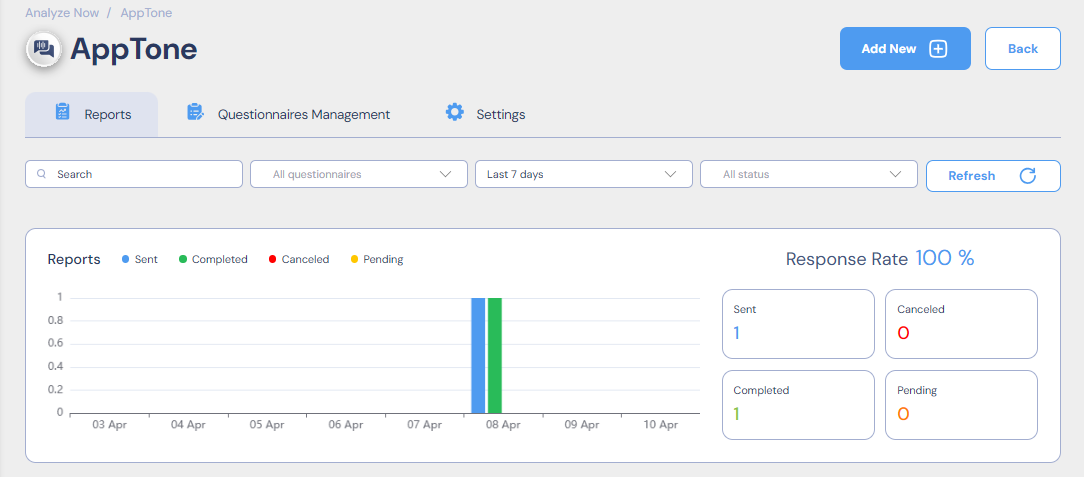

- AppTone Questionnaires System

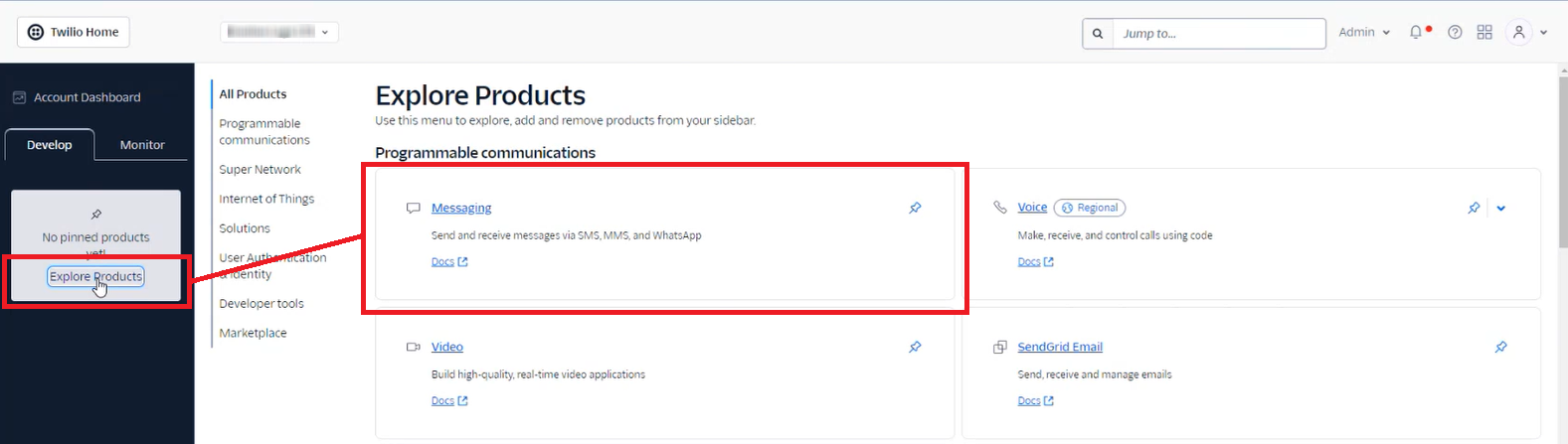

- Developer's zone

- Audio Analysis API

- API response examples

- Standard call center response sample

- Call center sales response sample

- Call center risk sample response

- API Error and warning codes

- "Analyze Now" APIs

- Obtaining advisor id

- AppTone Get Questionnaires List

- Docker installation and maintenance

- Real-time analysis (streaming)

- Sample code - avoid promises

- ADO's Voice Screen

- Página nueva

- API response examples

- Introduction to the Emotion Logic AI Platform

- About Layered Voice Analysis (LVA™)

- Emotion Logic platform's basics

- FeelGPT Advisors System

- AppTone Questionnaires System

- Developer's zone

- Audio Analysis API

- API response examples

- Standard call center response sample

- Call center sales response sample

- Call center risk sample response

- API Error and warning codes

- "Analyze Now" APIs

- Obtaining advisor id

- AppTone Get Questionnaires List

- Docker installation and maintenance

- Real-time analysis (streaming)

- Sample code - avoid promises

- CHANGELOG SDK JAVASCRIPT

- Manuales Técnicos

Welcome to B-Trust Documentation

Welcome to B-Trust Documentation

Welcome to the official documentation for B-Trust, your comprehensive solution for secure and efficient identity validation through facial biometric verification. B-Trust is designed to cater to a wide range of applications and industries, offering a flexible and robust platform for confirming identities with precision and ease.

What is B-Trust?

B-Trust represents the forefront of identity verification technology, combining advanced facial recognition algorithms with a suite of integration options to fit every client's needs. Whether you're looking to incorporate biometric verification directly into your mobile app, web application, or prefer a fully managed web experience, B-Trust provides the tools and flexibility necessary for seamless integration.

With B-Trust, you can

- Enhance Security: Utilize cutting-edge facial biometric technology to verify identities, reducing the risk of fraud and unauthorized access.

- Streamline User Experience: Offer your users a quick and effortless verification process, improving satisfaction and trust in your services.

- Adapt to Your Needs: Choose from our Android, iOS, or JavaScript SDKs for direct integration and use our comprehensive APIs to connect with the B-Trust platform. For those seeking a no-setup solution, our managed web experience handles the entire flow from start to finish.

Getting Started with B-Trust

Embarking on your journey with B-Trust's identity validation solutions begins here. This section is meticulously crafted to guide you through the initial setup and integration process, ensuring a smooth and efficient start. B-Trust's integration flexibility, through SDKs or a fully managed web experience, is complemented by a comprehensive suite of APIs. These APIs are integral to all integration paths, facilitating the seamless operation and enhanced functionality of your identity validation workflows.

Initial Steps

Before diving into the technical integration, let's start with the foundational steps to access B-Trust:

- Contact Our Sales Team: To get started with B-Trust, reach out to our sales team. They will guide you through our solutions, helping you choose the best fit for your needs.

- Account Setup and Credentials: Following your discussion with the sales team, they will set up your B-Trust account and provide you with the necessary credentials. These credentials are essential for accessing our SDKs, APIs, and the managed web experience.

Choosing Your Integration Path

With your account set up, it's time to decide how you'll integrate B-Trust into your system:

- SDKs (Libraries): For integrating directly into your mobile or web applications, our SDKs for Android, iOS, and JavaScript offer a native user experience. Utilize B-Trust APIs for data submission and verification results.

- Web Experience: For a quick and easy setup, direct users to our fully managed web page that handles the entire verification flow. This option is complemented by B-Trust APIs for a comprehensive integration.

Understanding B-Trust APIs

B-Trust APIs play a crucial role in all integration paths, facilitating the submission of biometric data, managing the verification process, and retrieving results. Familiarize yourself with our API documentation to fully leverage B-Trust's capabilities in your application or service.

API Documentation

Explore our detailed API documentation for information on endpoints, request/response formats, and practical use cases.

Welcome aboard! You're now on your way to implementing B-Trust's advanced identity validation solutions. Should you have any questions or need assistance, our dedicated support team and your sales representative are here to help.

Glossary

ENROLLMENT

The process through which the acquisition of images (facial photograph, front and back of the identity document), eventually fingerprints through external biometric captors, is performed in order to apply internal protocols for reviewing imprint-technical characteristics on the document, as well as comparing the facial features of the document contained within the document against the client's facial photograph at the time of submission.

VERIFICATION

The process through which the acquisition of a facial photograph and/or fingerprint is performed to compare it against those previously existing in the database for the identity in question and determine whether they belong to the same person or not. This process assumes and requires at least one previous successful enrollment before using this functionality.

OCR

Optical Character Recognition, allows the collection of images (front and back of the document) for the purpose of reading the information contained in the identity document presented by the client.

CLIENT

Natural or legal person who acquired the service with ADO and who signs the contract as the responsible party for the demanding part of the service.

END USER

Natural person on whom enrollment and/or OCR reading and/or verification are intended to be executed.

PROJECT NAME

Name created and managed from the ADO platform, which identifies a project within the platform provided by Ado Technologies SAS (hereinafter ADO), for the provision of the service. Within the same website, one or more projects may exist at the same time.

API KEY

Alphanumeric string created and managed from the ADO platform, which grants or denies access to services associated with each of the projects created within the platform.

BASE URL

It is the URL provided by ADO as a platform for the provision of the service, which defines the final interaction site of the SDK with a specific website.

Service Response Dictionary

The final rating of transactions is determined after the user passes through the biometric engine. This rating is found within the JSON object returned by our service, either through a callback or via a data push configuration. This object contains the "StateName" field, describing the final classification, and the "IdState" field, serving as the identification for this same rating. This process provides a precise and secure measure of the quality and validity of the transactions conducted, enabling us to understand the final outcome and how to handle them appropriately.

-

IdState: 1

- NameState: Pending

- Description: Applies to cases where some indication or alert is detected regarding the documents and/or their correspondence with the bearer. The case is referred to analysis by the BackOffice, and the definitive response will be delivered within the agreed times in the ANS.

-

IdState: 2

- NameState: Successful Process

- Description: Applies to images of authentic documents with uniprocedence facial (document vs selfie).

-

IdState: 4

- NameState: Authentic Document without Facial Comparison

- Description: Applies when the facial score does not exceed the approval threshold.

-

IdState: 5

- NameState: Erroneous Capture

- Description: The user captures partial images of low quality, which prevents a judgment until they are improved.

-

IdState: 6

- NameState: Invalid Document

- Description: Formats of documents not agreed upon in the service level agreements are provided.

-

IdState: 8

- NameState: Altered Document

- Description: Documents issued by the real issuing entity but present alterations or adulterations in one or more of their fields or technical characteristics.

-

IdState: 9

- NameState: False Document

- Description: Documents totally or partially produced outside the issuing entity.

-

IdState: 10

- NameState: Face does not correspond

- Description: Applies to cases where the facial score of a correspondence between the bearer and the content in the document does not reach the minimum defined as secure between the contrast and the contractor.

-

IdState: 11

- NameState: Fingerprints do not correspond

- Description: Applies to cases where the fingerprint score of a correspondence between the bearer and the content in the document does not reach the minimum defined as secure between the contrast and the contractor.

-

IdState: 14

- NameState: Previously Registered Person

- Description: Applies to cases where the face and/or identification number of the client were previously registered before the current attempt.

-

IdState: 15

- NameState: Error

- Description: Reserved for various communication, slowness, or unavailability errors between the SDK and the server.

-

IdState: 16

- NameState: Person found in control lists.

- Description: Reserved for matches in control lists for names and identification numbers.

-

IdState: 18

- NameState: Block

- Description: Reserved for blocking identification numbers for a defined period of time.

DocumentType

This document type is accepted by our service and is referenced by an integer ID. Before using it in any request, verify with Support which document type will be validated so we can enable it via the dashboard.

| ID | Nombre | País / Entidad |

|---|---|---|

| 1 | Cédula de ciudadanía | Colombia |

| 2 | PEP solo con Pasaporte | Colombia |

| 3 | Cédula de ciudadanía Ecuatoriana | Ecuador |

| 4 | Cédula de extranjería | Colombia |

| 5 | Tarjeta de identidad | Colombia |

| 6 | Card ID Israel | Israel |

| 7 | Cédula de ciudadanía Panameña | Panamá |

| 8 | Cédula de ciudadanía Peruana | Perú |

| 9 | Cédula de ciudadanía Paraguaya | Paraguay |

| 10 | INE México | México |

| 11 | Cédula de identidad Chilena | Chile |

| 12 | Identificación Puerto Rico | Puerto Rico (EE. UU.) |

| 13 | Cédula de identidad Costa Rica | Costa Rica |

| 14 | Documento personal de identificación Guatemala | Guatemala |

| 15 | Cédula Uruguaya | Uruguay |

| 16 | Cédula de Ciudadanía Boliviana | Bolivia |

| 17 | PPT (Permiso por Protección Temporal) | Colombia |

| 18 | Documento Nacional de Identidad (DNI) | España |

| 19 | Documento Nacional de Identidad (DNI) | Argentina |

| 20 | Pasaporte | Cualquier país |

| 21 | Cédula de Identidad y Electoral | República Dominicana |

Changelog Livennes

2.5

- Optimización general del flujo de captura y validación para mejorar estabilidad en sesiones consecutivas.

- Ajustes de rendimiento para reducir latencia en el retorno de resultados al host.

- Mejoras iniciales en reducción de evidencia (Base64) para disminuir tamaño de payload.

2.6.0

- Optimización del pipeline de cámara para mayor fluidez en dispositivos de gama media.

- Ajustes de compresión de evidencia para reducir transferencia y mejorar tiempos de entrega.

- Mejoras de experiencia (feedback más claro) durante el alineamiento y captura.

2.7.0

- Optimización del tracking facial para mayor estabilidad del rostro en escena.

- Mejoras de rendimiento en procesamiento de frames para reducir carga sostenida de CPU/GPU.

- Ajustes de lógica del flujo para mejorar consistencia entre captura, análisis y resultado.

2.8.0

- Optimización del retorno de evidencia al host: generación y codificación Base64 más eficiente.

- Reducción adicional del tamaño de imagen/evidencia manteniendo calidad de referencia.

- Mejoras en experiencia visual para mayor consistencia entre resoluciones y dispositivos.

2.9.0

- Optimización del arranque/cierre de cámara y recuperación del flujo en reintentos.

- Mejoras en consumo de memoria para evitar degradación de rendimiento tras múltiples ejecuciones.

- Ajustes de feedback al usuario para reducir fricción y aumentar claridad del proceso.

3.0.0

- Evolución mayor del SDK enfocada en rendimiento y estabilidad del ciclo de vida del componente.

- Optimización del pipeline de análisis para mayor fluidez y tiempos de respuesta más consistentes.

- Mejoras en el manejo de recursos (cámara/memoria) para sesiones prolongadas.

3.0.3

- Optimización del inicio de sesión de cámara y transición hacia el análisis para reducir tiempos de arranque.

- Ajustes de eficiencia para disminuir picos de CPU/GPU durante la inicialización.

3.0.8

- Mejoras en compresión y serialización de evidencia: Base64 más liviano y entrega más rápida al host.

- Optimización del flujo para reducir latencia entre captura y resultado final.

3.1.0

- Ajustes de lógica para mayor consistencia del flujo de validación.

- Optimización del uso de memoria en sesiones repetidas para aumentar estabilidad.

3.1.5

- Mejoras de UX: feedback más claro y consistente durante el proceso de captura.

- Optimización de rendimiento en dispositivos con recursos limitados para mantener fluidez.

3.2.0

- Optimización de reintentos sin reinicialización completa para mejorar continuidad del flujo.

- Ajustes de compresión de evidencia para reducir payload manteniendo calidad adecuada.

3.2.6

- Optimización del procesamiento de frames para disminuir carga sostenida de CPU/GPU.

- Mejoras de estabilidad del pipeline de cámara para continuidad del análisis.

3.3.0

- Mayor robustez del análisis facial ante variaciones de iluminación y movimientos moderados.

- Optimización de generación/codificación de evidencia para retorno más rápido al host.

- Ajustes de rendimiento para mantener FPS más estable durante la sesión.

3.3.7

- Optimización del tiempo de inicialización del módulo de cámara y preparación del análisis.

- Ajustes internos para una experiencia más estable en ejecuciones prolongadas.

3.4.0

- Optimización del tracking facial para mayor consistencia del rostro durante toda la sesión.

- Mejoras de rendimiento en compresión de evidencia (Base64) para reducir transferencia y tiempos.

- Ajustes de experiencia visual para consistencia en diferentes resoluciones y dispositivos.

3.4.6

- Optimización del uso de memoria y liberación de recursos al finalizar el flujo para mantener estabilidad.

- Mejoras de rendimiento en UI/Render: reducción de carga de estilos y mayor fluidez en transiciones.

3.5

- Fortalecimiento de seguridad: mejoras en detección y mitigación de spoofing IA mediante refinamiento de reglas y señales.

- Mejoras en deteccion con intentos de spoofing, enhancing y validaciones de video.

- Adición de señales para identificar modo de depuración (debug) y estado del dispositivo, fortaleciendo controles de integridad del entorno.

- Mejora del análisis de comportamiento: detección de patrones inusuales del usuario final (interacciones atípicas, intentos repetitivos y comportamiento anómalo).

- Optimización del ciclo de vida del componente (init → ejecución → cierre) para mayor estabilidad.

- Mejoras en arranque y aplicación de configuración para reducir tiempos de inicio y aumentar consistencia.

3.5.1

- Integración del nuevo motor de Liveness con detección avanzada de rostro y análisis de emociones/gestos para mayor precisión.

- Optimización del flujo de captura y validación para una ejecución más guiada, estable y consistente.

- Mejoras de diseño y experiencia (UI/UX) para mayor claridad de feedback al usuario.

- Optimización del pipeline de cámara y análisis para reducir latencia y acelerar retorno de evidencia al host.

- Mejora de seguridad: mayor robustez del análisis frente a manipulación del flujo y escenarios de presentación.

3.5.6

- Mejora del sistema de detección facial para reconocimiento de gestos (por ejemplo, sonrisa) y mayor precisión de detección de vida.

- Ajustes de rendimiento y experiencia: reducción/optimización de estilos y mejoras en fluidez del flujo.

- Optimización específica para Snapdragon 8 Elite: mayor rendimiento y precisión en detección de máscara facial y detección de vida.

- Fortalecimiento de señales anti-spoofing y validaciones de video para reducir riesgo de intentos de bypass.

- Mejoras de eficiencia en consumo de recursos durante sesión activa (mejor uso de CPU/GPU y estabilidad percibida).

3.5.7

- Optimización de inicialización e instanciación del componente para mayor estabilidad y consistencia de configuración.

- Mejoras de localización: aplicación consistente del idioma configurado y feedback alineado a la configuración del host.

- Ajustes de rendimiento adicionales para mejorar tiempos de respuesta y estabilidad general del flujo.

- Refinamiento de lógica de control para mejorar consistencia de estados, validaciones y entrega de resultados.

SDKS

Android SDK Guide

This guide provides detailed instructions for integrating the Scanovate Colombia SDK into your Android application, enabling robust identity validation processes through facial biometric verification.

Requirements and Compatibility

Before starting the integration process, ensure your development environment meets the following requirements:

- Android Studio: The latest version is recommended for optimal compatibility.

- Minimum SDK Version: Android SDK version 27 (Lollipop) or higher.

- Target SDK Version: Android SDK version 35 (Android 15) to ensure your app is compatible with the latest Android OS.

- Compile SDK Version: Android SDK version 35.

Installation

1. Add the library

Download the "hybridComponent_3_0_0_17.aar" library and add it to your project's libs folder. Ensure you configure your project's build.gradle file to include the library as a dependency:

dependencies {

implementation(name: 'hybridComponent_3_0_0_17', ext: 'aar')

}

2. Import Required Libraries

Add the following imports in your activity or fragment where you intend to use the Scanovate SDK:

Java

import mabel_tech.com.scanovate_sdk.ScanovateSDK;

import mabel_tech.com.scanovate_demo.HybridComponent;

import mabel_tech.com.scanovate_sdk.SdkResultHandler;

import mabel_tech.com.scanovate_sdk.data.model.ComponentCloseResult;

The CloseResponse object will contain the results of the transaction, providing detailed feedback on the validation process.

Implement in app/build.gradle:

dependencies {

implementation(files("libs/hybridComponent_3_0_0_17.aar"))

// Dependencies required by the SDK

implementation(platform("androidx.compose:compose-bom:2026.02.00"))

implementation("androidx.compose.ui:ui")

implementation("androidx.compose.material3:material3:1.5.0-alpha14")

implementation("androidx.activity:activity-compose:1.12.4")

implementation("androidx.navigation:navigation-compose:2.7.7")

implementation("androidx.lifecycle:lifecycle-viewmodel-compose:2.8.0")

implementation("androidx.lifecycle:lifecycle-runtime-compose:2.8.0")

implementation("androidx.security:security-crypto:1.1.0-alpha06")

implementation("com.squareup.retrofit2:retrofit:2.9.0")

implementation("com.squareup.retrofit2:converter-gson:2.9.0")

implementation("com.squareup.okhttp3:okhttp:4.12.0")

implementation("com.google.code.gson:gson:2.10.1")

implementation("com.google.accompanist:accompanist-permissions:0.34.0")

implementation("com.google.android.gms:play-services-location:21.3.0")

implementation("org.jetbrains.kotlinx:kotlinx-coroutines-play-services:1.8.1")

implementation("com.airbnb.android:lottie-compose:6.4.0")

}Permissions

The SDK declares the necessary permissions in its own manifest. These are automatically merged upon compilation. Manual declaration is not required.

<uses-permission android:name="android.permission.INTERNET" />

<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />

<uses-permission android:name="android.permission.CAMERA" />

<uses-permission android:name="android.permission.VIBRATE" />

<uses-permission android:name="android.permission.ACCESS_COARSE_LOCATION" />

<uses-permission android:name="android.permission.ACCESS_FINE_LOCATION" />

<uses-permission android:name="android.permission.WAKE_LOCK" />

Example Implementation

For a practical example of how to implement the Scanovate SDK in your Android application, refer to the following steps:

- Setup UI Elements: Initialize buttons, text views, and other UI elements in your activity's

onCreatemethod. This setup includes buttons for starting the enrollment and verification processes, a text view for displaying results, and an edit text for user input. - Invoke the SDK: Use the

HybridComponent.startmethod to launch the Scanovate SDK. This method requires several parameters, including language, project name, API key, product ID, and the SDK URL. It also allows you to specify the type of capture (e.g., liveness detection, document capture) and whether to capture the front or back side of a document. - Handle Callbacks: Implement

ScanovateHandlerto manage success and failure callbacks. On success, process theCloseResponseobject to display the transaction result. On failure, handle errors accordingly.

Example

// Example capture method implementationScanovateSDK.INSTANCE.start( this, // Activity "1", // documentType (String, número > 0) "es", // language ("es" o "en") "miProyecto", // projectNameSdk "mi-api-key", // apiKeySdk 1, // productId (int > 0) "https://servidor.com/miProyecto/api/", // urlSdk "https://tracer.com/api/EventTracer/", // urlTracerBackendService (opcional, "" si no aplica) "", // processId (opcional) 1, // functionCapture: 1 = Liveness, 2 = CardCapture true, // isFrontSide: true = frente, false = reverso "token", // token (opcional, "" si no aplica) "", // uidDevice (opcional, el SDK genera uno si está vacío) new SdkResultHandler() { @Override public void onSuccess(ComponentCloseResult result) { // Captura exitosa int statusCode = result.getStatusCode(); String message = result.getMessage(); boolean isAlive = result.isAlive(); String image = result.getImage(); // Base64 String keyProcess = result.getKeyProcessLiveness(); String uid = result.getUidDevice(); } @Override public void onFailure(ComponentCloseResult result) { // Error o cancelación int statusCode = result.getStatusCode(); String message = result.getMessage(); } } );

Parameters Explained

- language: Sets the language for the SDK's UI.

- projectName: Unique identifier for your project.

- apiKey: Authentication key provided by Scanovate.

- productId: Identifies the specific Scanovate product/service being used.

- sdkUrl: The base URL for making API calls to the Scanovate services.

- Url_TracerBackendServices: Url for the event reporting service is not required and is only an extra service. (Optional)

- ImmersiveMode: Mode to make the component consume all available space while hiding the system UI.

- Process_ID: Process identifier to perform the events mapped at the SDK level. (Optional)

- functionCapture: Specifies the operation mode of the SDK.

- documentSide: Determines which side of the document to capture.

- additionalParameters: Allows for passing any additional required parameters.

- completionHandler: Closure that handles the response or error from the SDK.

Process Transaction Results

After capturing the necessary data, use the RetrofitClient to send the data for validation and display the final state of the transaction to the user.

State Codes Reference

Be aware of the following state codes when processing responses:

200: "SUCCESS"201: "THE_NUMBER_OF_CONFIGURED_ATTEMPTS_WAS_EXCEEDED_AND_NO_LIFE_WAS_FOUND_IN_THESE"203: "TIMEOUT"302: "INTERNAL_ERROR"204: "CANCELED_PROCED"205: "PERMISSIONS_DENIED"401: "TOKEN_ERROR"404: "INVALID_CREDENTIALS"503: "CONNECTION_ERROR"

This guide aims to streamline the integration process of the Scanovate Colombia SDK into your Android application, ensuring you can efficiently implement a robust identity validation system.

Demo Application

For a comprehensive example, including full source code demonstrating the integration and usage of the Scanovate Colombia SDK, visit our GitHub repository:

Scanovate Colombia SDK Demo App For Android

This demo app provides a hands-on example to help you understand how to integrate and utilize the SDK in your own applications.

iOS SDK Guide

This guide outlines the steps for integrating the SMSDK framework into your iOS application, enabling identity validation processes through facial biometric verification or document scanning.

Installation

-

Add the library

- Download the "SMSDK.xcframework" file.

- In your Xcode project, navigate to the target's general settings.

- Go to the "Frameworks, Libraries, and Embedded Content" section.

- Click the "+" button and add the "SMSDK.xcframework" to your project. Ensure it's set to "Embed & Sign".

-

Import Required Libraries

In the file where you plan to use the SDK, import the necessary libraries:

swift

import UIKit

import AdoComponent

The TransactionResponse object will contain the results of the transaction, providing detailed feedback on the validation process.

Minimum SDK Version for iOS

Update the minimum iOS version to iOS 11.0:

Example Implementation

To initiate the SMSDK framework, use the initWith method from the SMManager class. This method requires a delegate and an SMParams object containing the launch parameters. Implement the SMDelegate extension to handle the SDK's response.

Intialization

let params = SMParams(productId: "1",

projectName: "lulobankqa",

apiKey: "db92efc69991",

urlSdk: "https://adocolumbia.ado-tech.com/lulobankqa/api/",

token: "",

function: 1, // 1 for Liveness, 2 for Document Scanning

isFrontSide: false, // true for front, false for back of the document

uidDevice: "",

language: "en") // "en" for English, "es" for Spanishlet smManagerVC = SMManager.initWith(delegate: self, params: params)

smManagerVC.modalPresentationStyle = .fullScreen

present(smManagerVC, animated: true, completion: nil)// MARK: - SMDelegate

extension ViewController: SMDelegate {

func completedWithResult(result: Bool, response: ResultsResponse?) {

dismiss(animated: true) {

// Handle the SDK response here

}

}

}

Parameters Explained

- productId: Identifier for the product being used.

- projectName: Your project identifier provided by the service.

- apiKey: Your API key for authentication with the service.

- urlSdk: The base URL for the SDK's services.

- token: Optional token for additional authentication (if required).

- function: Determines the operation mode (e.g., 1 for Liveness, 2 for Document Scanning).

- isFrontSide: Indicates which side of the document to capture.

- uidDevice: A unique identifier for the device.

- language: Specifies the language for the SDK interface.

Resources

Resource files, including animations provided by the client, can be found at the following path within your project:

SMSDKTest/Resources/Animations

Ensure these resources are correctly integrated into your project for the SDK to function as intended.

State Codes Reference

Be aware of the following state codes when processing responses:

200: "SUCCESS"201: "THE_NUMBER_OF_CONFIGURED_ATTEMPTS_WAS_EXCEEDED_AND_NO_LIFE_WAS_FOUND_IN_THESE"203: "TIMEOUT"204: "CANCELED_PROCED"205: "PERMISSIONS_DENIED"401: "TOKEN_ERROR"404: "INVALID_CREDENTIALS"500: "CONNECTION_ERROR"

Demo Application

For a comprehensive example, including full source code demonstrating the integration and usage of the Scanovate Colombia SDK, visit our GitHub repository:

Scanovate Colombia SDK Demo App For iOS

This demo app provides a hands-on example to help you understand how to integrate and utilize the SDK in your own applications.

JavaScript SDK Guide

⚠️¡CAMBIOS!⚠️

A partir de ahora, el archivo ComponentsManager.js dejará de cargarse localmente y se recomienda utilizar el CDN oficial de ADO Tech para una mejor gestión de versiones, mejoras de rendimiento y actualizaciones automáticas.

Se reemplaza la importación:

<script type="text/javascript" src="Assets/scanovate_card_capture/script.js"></script>

<script type="text/javascript" src="Assets/ComponentsManager.js"></script>por:

<script type="text/javascript" src="https://cdn-js.ado-tech.com/latest/ComponentsManager.js"></script>El uso de latest garantizará que siempre se utilice la última versión disponible, que actualmente abarca desde la 1.0 hasta la 2.5.

IMPORTANTE: Se le debe proporcionar a ADO un listado de dominios de los cuales se consumirá el cdn para agregar a listas blancas y poder consumir el servicio

Integrating ADO Technologies' JavaScript SDK into your web application enables you to leverage advanced identity verification features, such as Liveness Detection and Document Capture. This guide provides a structured approach to seamlessly incorporate these functionalities, enhancing the security and user experience of your platform.

Overview

The ADO Technologies JavaScript SDK offers a comprehensive suite of tools designed for real-time identity verification. By integrating this SDK, you can authenticate users by capturing their facial features and identification documents directly within your web application. This process is streamlined and user-friendly, ensuring a high level of accuracy in identity verification.

Requirements

Before starting the integration, ensure you have:

- Access to ADO Technologies' JavaScript SDK url.

- The API key and project name provided by ADO Technologies.

- A clear understanding of the specific features (e.g., Liveness Detection, Document Capture) you wish to implement.

Integration Steps

-

Include SDK and Assets: Incorporate the JavaScript SDK and related assets into your web project. This involves linking to the SDK's script files and CSS for styling.

-

Configure SDK Parameters: Set up the necessary parameters for the SDK, including the base URL, project name, API key, and product ID. These parameters are crucial for initializing the SDK and ensuring it functions correctly within your application.

-

Implement User Interface: Design and implement the user interface through which users will interact with the identity verification features. This includes input fields for configuration parameters and buttons to initiate the capture process.

-

Capture Process: Utilize the SDK's functions to capture facial images or documents based on the user's selection. This process should be intuitive, with clear instructions provided to the user.

-

Handle Responses: Implement logic to handle the SDK's responses, including success and error callbacks. Display the results appropriately within your application, ensuring users are informed of the outcome.

-

Testing and Validation: Thoroughly test the integration to ensure the identity verification process works as expected. Pay special attention to user experience, ensuring the process is smooth and intuitive.

Parameters

To initialize the ADO Technologies JavaScript SDK for identity verification within your web application, you'll need to configure several key parameters. These parameters are essential for tailoring the SDK's functionality to your specific needs and ensuring the verification process operates correctly. Below is an explanation of each parameter required for initialization:

-

UrlBase: The base URL of the ADO Technologies service. This URL is the entry point for all SDK requests and should be provided by ADO Technologies. It determines where the SDK sends its verification requests.

-

ProjectName: The name of your project as registered with ADO Technologies. This parameter helps the service identify which client is making the request, ensuring that the verification process is correctly attributed and logged.

-

ApiKey: A unique key provided by ADO Technologies that authenticates your application's requests. The API key is crucial for securing communication between your application and the ADO Technologies service, preventing unauthorized access.

-

ProductId: An identifier for the specific product or service you're using from ADO Technologies. This could relate to different types of verification services offered, such as Liveness Detection or Document Capture.

-

functionCapture: Determines the type of capture process to be initiated. This parameter allows you to specify whether you're performing Liveness Detection, Document Capture, or other supported verification processes. The options are typically represented as numerical values or specific strings defined by the SDK.

-

IsFrontSide: A boolean parameter indicating whether the document capture (if applicable) should focus on the front side of the identification document. This is relevant for services that require document images as part of the verification process.

-

UidDevice: A unique identifier for the device being used to perform the verification. This can be useful for logging, analytics, and ensuring that verification attempts are uniquely associated with a specific device.

-

Token: An optional parameter that may be required for additional authentication or session management purposes. If your verification process involves multiple steps or requires maintaining a session state, this token can be used to manage that state across requests.

-

ProcessId: An identifier for the specific verification process instance. This can be used to track the progress of a verification attempt or to retrieve results after the process has been completed (How to generate the process Id).

These parameters are typically set by assigning values to the corresponding input fields or variables within your web application's frontend code. Once configured, these parameters are passed to the SDK's initialization function, which prepares the SDK for the capture and verification process based on the provided configuration.

It's important to handle these parameters securely, especially those that could be sensitive, such as the ApiKey and Token. Ensure that your application's frontend and backend architecture support secure transmission and storage of these values.

Example Implementation

Below is an example HTML structure demonstrating how to set up the SDK in your web application. This example includes the SDK and asset links, configuration inputs, and the capture initiation button.

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="UTF-8">

<meta name="viewport" content="width=device-width, initial-scale=1.0, user-scalable=0, minimal-ui">

<title>Demo ADO Components</title>

<script type="text/javascript" src="https://cdn-js.ado-tech.com/latest/ComponentsManager.js"></script>

<link rel="stylesheet" href="Assets/scanovate_card_capture/assets/main.css">

<link rel="stylesheet" href="Assets/scanovate_card_capture/assets/loader.css">

</head>

<body>

<!-- Configuration and Capture UI omitted for brevity --><script>

function InitCapture() {

// Capture initialization logic and callbacks

}

</script>

</body>

</html>

This structure is a starting point for integrating the SDK. Customize the configuration and UI according to your application's needs and the specific features you plan to use.

By following this guide, you can effectively integrate ADO Technologies' JavaScript SDK into your web application, enabling robust identity verification functionalities that enhance the security and user experience of your platform.

Liveness API Documentation

Introduction

The Liveness API provides access to biometric liveness detection results and reporting capabilities. This documentation focuses on two key endpoints: retrieving liveness results and generating reports.

Important Contact Information: For information about this API and other solutions in our catalog, please contact our financial area for evaluation at julian@ado-tech.com. All access keys, endpoint URLs, and other access elements will only be provided after reaching a formal agreement between both entities.

Important Note

The liveness detection process requires integration with components from https://docs.ado-tech.com/books/b-trust/chapter/sdks. These components have associated costs and service agreements that must be discussed with the finance department before implementation.

API Endpoints

1. Get Results

Retrieves the results of a previously executed liveness verification process.

Endpoint: POST {base_url}/api/images/getResults

Request Body:

{

"idTransaction": "process_id",

"user": "your_username",

"password": "your_password",

"apiKey": "your_api_key",

"transactionNumber": "process_id"

}

Response: The API returns detailed liveness verification results, including:

{

"referenceNumber": "a7112314-f8c6-40b9-a5de-ab91fa98e3bc",

"score": 0.8818287,

"quality": 0.8818287,

"probability": 0.9878632,

"threshold_probabillity": 0.6,

"threshold_quality": 0.5,

"isAlive": true,

"isFraud": false,

"image": "/ ",

"videoBase64": " "

}

Key Response Fields:

referenceNumber: Unique identifier for the verification resultscore: Overall liveness scorequality: Image quality scoreprobability: Probability that the subject is alivethreshold_probabillity: Minimum probability threshold for positive verificationthreshold_quality: Minimum quality threshold for acceptable imagesisAlive: Boolean indicating liveness detection resultisFraud: Boolean indicating potential fraud detectionimage: Base64 encoded image (abbreviated in example)videoBase64: Base64 encoded video if applicable (abbreviated in example)

Note: The key process liveness ID required for this endpoint is obtained from the B-Trust SDK components. Access to these components requires proper licensing and authorization.

2. Generate Report

Generates a comprehensive report of liveness verifications for a specific project and date range.

Endpoint: POST {base_url}/api/images/Report

Request Body:

{

"IntialDate": "2024-01-01T00:00:00.000Z",

"EndDate": "2024-02-01T23:59:59.999Z",

"projectId": "your_project_id"

}

Important Considerations:

- It is recommended to request reports spanning only 1-2 months at a time for optimal performance

- The

projectIdmust match the assigned project identifier from your service agreement - Date formats must follow ISO 8601 standard (YYYY-MM-DDThh:mm:ss.sssZ)

Response: The API will return a comprehensive report of liveness verification transactions within the specified date range for the given project.

Additional Services

For more advanced biometric verification needs, the following solutions are available:

- Compare Face: Validates and compares facial images

- Validar rostro-persona: Verifies that a face belongs to a specific person

- Validar rostro-documento: Validates a face against identification document photos

These additional services can be integrated with the liveness detection process to create a complete identity verification solution. Each component returns useful data for integration with the liveness verification workflow.

Service Acquisition

Our catalog contains numerous additional routines and services for biometric verification and identity validation. For more information about all available services, pricing, and implementation:

- Please contact our financial area at: julian@ado-tech.com

- All access elements including API keys, endpoint URLs, and credentials will only be provided after a formal agreement is reached between both entities

- Integration support is available after service contracts are finalized

Integration Considerations

- Proper error handling is essential for all API calls

- Credentials must be securely stored and transmitted

- Results should be evaluated against your specific security threshold requirements

- Integration with the B-Trust SDK components requires proper licensing and configuration

By leveraging these endpoints, you can access liveness verification results and generate comprehensive reports for your biometric verification processes.

SDK response update 3.0

As part of the SDK update, starting now we will generate standardized response objects, including the full detail of fields and their possible values.

Additionally, we improved the tagging logic to correctly detect icons and related events. With these improvements, when a spoofing-related event is detected, a transaction will be generated and the event will be recorded with its corresponding details.

If spoofing is detected, an evidence image will also be sent to the customer-provided webhook. Moreover, from now on the transaction details (including the transaction number) will be delivered through the webhook to ensure an auditable record of the event.

Expected response: Transaction details sent to the webhook.

SDK response level (PSA): This information will be returned by the SDK as part of the standardized response object.

{

"processType": "liveness",

"statusCode": 1,

"detail": {

"status": "approved",

"reason": {

"name": "NOT_ALERT",

"description": "The transaction was approved."

},

"uidDevice": "1da62007d8974a9fa71e18cba76f6fc1",

"keyProcessLiveness": "69c265aeca9d4863b61b1fc6a8099f52",

"processId": "3f2b9c1e-7a4d-4f2a-9c0c-6c4c2e9b8d12",

"image": {

"encoding": "base64",

"mimeType": "image/jpeg",

"data": "<BASE64>"

}

}

}Field description

The component’s possible responses are split by functionality: CardCapture (document capture) or Liveness (proof of life). Below is a practical, complete list of outcomes you should handle (success, pending, failures, cancellations, and technical errors).

Liveness

| Status (code) | Nombre (name) | Descripción (description) | Objeto (reason) |

|---|---|---|---|

NONE |

NOT_ALERT |

The transaction was approved with no alerts detected. | {"code":"NONE","name":"NOT_ALERT","description":"The transaction was approved with no alerts detected."} |

NOT_ALIVE |

Not alive detected |

The liveness check indicates the subject is not alive. | {"code":"NOT_ALIVE","name":"Not alive detected","description":"The liveness check indicates the subject is not alive."} |

CLOSED_EYES |

Eyes closed detected |

The subject's eyes were detected as closed during the capture. | {"code":"CLOSED_EYES","name":"Eyes closed detected","description":"The subject's eyes were detected as closed during the capture."} |

SPOOFING |

Spoofing detected |

A potential spoofing attempt was detected during the liveness process. | {"code":"SPOOFING","name":"Spoofing detected","description":"A potential spoofing attempt was detected during the liveness process."} |

PAD |

Presentation attack detected (PAD) |

A potential presentation attack was detected (PAD confidence triggered). | {"code":"PAD","name":"Presentation attack detected (PAD)","description":"A potential presentation attack was detected (PAD confidence triggered)."} |

PERMISSION_DENIED |

Camera permission denied |

Camera access was denied by the user or the operating system. | {"code":"PERMISSION_DENIED","name":"Camera permission denied","description":"Camera access was denied by the user or the operating system."} |

CAMERA_NOT_FOUND |

Camera not found |

No camera was detected on the device, or it is unavailable. | {"code":"CAMERA_NOT_FOUND","name":"Camera not found","description":"No camera was detected on the device, or it is unavailable."} |

FACE_NOT_DETECTED |

Face not detected |

No face was detected in the camera frame. | {"code":"FACE_NOT_DETECTED","name":"Face not detected","description":"No face was detected in the camera frame."} |

NETWORK_ERROR |

Network error |

A network issue prevented the process from completing. | {"code":"NETWORK_ERROR","name":"Network error","description":"A network issue prevented the process from completing."} |

SDK_ERROR |

SDK error |

An internal SDK error occurred during the process. | {"code":"SDK_ERROR","name":"SDK error","description":"An internal SDK error occurred during the process."} |

USER_CANCELED |

User canceled |

The user canceled the process before completion. | {"code":"USER_CANCELED","name":"User canceled","description":"The user canceled the process before completion."} |

Example:

As an example response, the JSON below represents a rejected transaction (Status 2).

The reason field may come as an object, including the rejection reason details and its description.

It also includes identifiers such as uidDevice and keyProcessLiveness, plus processId (the unique process UID).

Evidence (e.g., an image) can be returned as Base64, including the encoding type.

{

"processType": "liveness",

"statusCode": 2,

"detail": {

"status": "rejected",

"reason": {

"name": "SPOOFING",

"description": "The liveness check indicates the subject is not alive."

},

"uidDevice": "1da62007d8974a9fa71e18cba76f6fc1",

"keyProcessLiveness": "69c265aeca9d4863b61b1fc6a8099f52",

"processId": "3f2b9c1e-7a4d-4f2a-9c0c-6c4c2e9b8d12",

"image": {

"encoding": "base64",

"mimeType": "image/jpeg",

"data": "<BASE64>"

}

}

}CardCapture

| Status (code) | Nombre (name) | Descripción (description) | Objeto (reason) |

|---|---|---|---|

NONE |

NOT_ALERT |

The transaction was approved with no alerts detected. | {"code":"NONE","name":"NOT_ALERT","description":"The transaction was approved with no alerts detected."} |

PERMISSION_DENIED |

Camera permission denied |

Camera access was denied by the user or the operating system. | {"code":"PERMISSION_DENIED","name":"Camera permission denied","description":"Camera access was denied by the user or the operating system."} |

CAMERA_NOT_FOUND |

Camera not found |

No camera was detected on the device, or it is unavailable. | {"code":"CAMERA_NOT_FOUND","name":"Camera not found","description":"No camera was detected on the device, or it is unavailable."} |

CAMERA_IN_USE |

Camera in use |

The camera is currently being used by another application. | {"code":"CAMERA_IN_USE","name":"Camera in use","description":"The camera is currently being used by another application."} |

NOT_SUPPORTED |

Device not supported |

The device does not meet the requirements for this capture process. | {"code":"NOT_SUPPORTED","name":"Device not supported","description":"The device does not meet the requirements for this capture process."} |

DOCUMENT_NOT_DETECTED |

Document not detected |

No document was detected in the camera frame. | {"code":"DOCUMENT_NOT_DETECTED","name":"Document not detected","description":"No document was detected in the camera frame."} |

NETWORK_ERROR |

Network error |

A network issue prevented the process from completing. | {"code":"NETWORK_ERROR","name":"Network error","description":"A network issue prevented the process from completing."} |

SDK_ERROR |

SDK error |

An internal SDK error occurred during the process. | {"code":"SDK_ERROR","name":"SDK error","description":"An internal SDK error occurred during the process."} |

USER_CANCELED |

User canceled |

The user canceled the process before completion. | {"code":"USER_CANCELED","name":"User canceled","description":"The user canceled the process before completion."} |

FOCUS_LOST |

Focus lost / component interrupted |

The process was interrupted because the app lost focus or the component was closed, minimized, or moved to the background. | {"code":"FOCUS_LOST","name":"Focus lost / component interrupted","description":"The process was interrupted because the app lost focus or the component was closed, minimized, or moved to the background."} |

Example:

For the document capture process, it will be mapped under processType: "cardCapture" and will use statusCode: 3, which groups any cancellation scenario that affects the execution of the flow, for example:

-

Process cancelled by the user.

-

Component focus loss during the session (e.g., the app goes to the background or the user navigates to another screen).

The response will include the reason (cancellation reason) and status (state), as well as the processId (unique process identifier) and its linked ID (if applicable). Additionally, the imageDetail object will be returned, where the result of the processed image will be provided.

{

"processType": "cardcapture",

"side":"front",

"statusCode": 3,

"detail": {

"status": "failed",

"reason": {

"name": "USER_CANCELED",

"description": "The user canceled the process before completion."

},

"uidDevice": "1da62007d8974a9fa71e18cba76f6fc1",

"processId": "3f2b9c1e-7a4d-4f2a-9c0c-6c4c2e9b8d12",

"image": {

"encoding": "base64",

"mimeType": "image/jpeg",

"data": "<BASE64>"

}

}

}This process will trigger an event to the webhook, where a record will be registered and linked by a UID (for example, processId as the unique process identifier). The relationship between the transaction and the webhook event will be kept for traceability.

The payload sent will follow the structure below. This response only applies to the webhook when reasonCode is one of the following values: SPOOFING, PAD, CLOSED_EYES, NOT_ALIVE.

{

"transactionId": "10743",

"processId": "3f2b9c1e-7a4d-4f2a-9c0c-6c4c2e9b8d12",

"transactionStatus": "rejected",

"stages": [

{

"processType": "liveness",

"status": “Spoofing”,

"reason": {

"name": "SPOOFING",

"description": "A potential spoofing attempt was detected during the liveness process."

},

"uidDevice": "1da62007d8974a9fa71e18cba76f6fc1",

"keyProcessLiveness": "69c265aeca9d4863b61b1fc6a8099f52",

"processId": "3f2b9c1e-7a4d-4f2a-9c0c-6c4c2e9b8d12"

}

]

}COMPLETE EXPERIENCIE SOLUTION

Web Integration

In today's digital age, ensuring the authenticity of user identities is paramount for online platforms, especially for services requiring a high level of security and trust. The Full Experience Integration offers a comprehensive solution by seamlessly incorporating identity validation processes directly into your web application. This guide introduces the concept of redirecting users to a dedicated web page for either ENROLL or VERIFY flows, providing a complete, secure, and user-friendly experience for identity verification.

Why Full Experience Integration?

Integrating the Full Experience for identity validation directly into your web application has several key benefits:

- Enhanced Security: By utilizing advanced biometric verification and document authentication, you significantly reduce the risk of identity fraud and enhance the overall security of your platform.

- Improved User Experience: Users appreciate a seamless and efficient process for identity verification. Redirecting to a dedicated web page simplifies the user journey, making it straightforward and hassle-free.

- Flexibility and Ease of Integration: Whether through GET or POST methods, redirecting users to a web page for identity verification offers flexibility in integration, allowing you to maintain the look and feel of your application while leveraging robust verification processes.

- Scalability: As your platform grows, the need for a reliable and scalable identity verification solution becomes crucial. The Full Experience Integration is designed to scale with your needs, ensuring consistent performance and reliability.

The ENROLL and VERIFY Flows

The Full Experience Integration encompasses two primary flows:

- ENROLL Flow: A comprehensive identity validation process that includes liveness detection, document scanning and authentication, OCR for data extraction, biometric extraction and comparison, and secure data association and storage. This flow establishes a verified biometric profile linked to the user's identity document.

- VERIFY Flow: A streamlined process that verifies an individual's identity by comparing live biometric data against the previously created biometric profile during the ENROLL process. This flow ensures that the person accessing the service is the same individual who initially enrolled.

Implementing the Integration

Integrating these flows into your web application involves redirecting users to a specific URL for either the ENROLL or VERIFY process. This redirection can be achieved using GET or POST methods, depending on your application's requirements and the specific parameters of the identity verification process. The URL includes all necessary parameters to initiate the verification process, such as API keys, project names, product numbers, and any additional custom parameters required for the transaction.

This guide aims to provide you with the knowledge and tools needed to implement the Full Experience Integration for identity verification within your web application. By following the outlined steps and understanding the importance of each flow, you can enhance the security and user experience of your platform, ensuring a trustworthy and efficient identity verification process.

Classic Flow

Integrating the Full Experience for identity verification into your web application involves redirecting users to a dedicated web page where they can complete the ENROLL or VERIFY process. This tutorial will guide you through the steps to implement these flows, ensuring a seamless integration that enhances user experience and security.

Requirements and Compatibility

Before you begin, ensure you have the following:

- Access to the base URL for the identity verification service.

- An API key and project name provided by the service provider.

- Knowledge of the product number associated with the service you intend to use.

- Familiarity with GET and POST HTTP methods.

Preparing the Redirection URLs

Based on the flow you wish to implement (ENROLL or VERIFY), prepare the URL to which users will be redirected. The URL structure differs slightly between the two flows:

ENROLL

GET Method: Construct the URL with all required parameters appended as query strings.

POST Method: If using POST, you'll need to set up a form or a web request in your application that submits to the URL https://your-base-url/validar-persona/ with the parameters included in the body of the request.

<form action="https://your-base-url/validar-persona/" method="post" target="_blank">

<input type="hidden" name="callback" value="YOUR_CALLBACK_URL" />

<input type="hidden" name="key" value="YOUR_API_KEY" />

<input type="hidden" name="projectName" value="YOUR_PROJECT_NAME" />

<input type="hidden" name="product" value="YOUR_PRODUCT_NUMBER" />

<input type="hidden" name="Parameters" value='YOUR_CUSTOM_PARAMETERS' />

<input type="hidden" name="riskId" value="YOUR_RISK_ID" />

<button type="submit">Start ENROLL Process</button>

</form>

Replace placeholders like YOUR_CALLBACK_URL, YOUR_API_KEY, etc., with actual values provided by the identity verification service. The Parameters field should contain a JSON string with any additional information you wish to pass.

VERIFY

GET Method: Similar to ENROLL, but with parameters suited for verification.

POST Method: Submit to https://your-base-url/verificar-persona/ with verification parameters in the request body.

<form action="https://your-base-url/verificar-persona/" method="post" target="_blank">

<input type="hidden" name="callback" value="YOUR_CALLBACK_URL" />

<input type="hidden" name="key" value="YOUR_API_KEY" />

<input type="hidden" name="projectName" value="YOUR_PROJECT_NAME" />

<input type="hidden" name="documentType" value="DOCUMENT_TYPE" />

<input type="hidden" name="identificationNumber" value="IDENTIFICATION_NUMBER" />

<input type="hidden" name="product" value="YOUR_PRODUCT_NUMBER" />

<input type="hidden" name="riskId" value="YOUR_RISK_ID" />

<input type="hidden" name="searchOneToMany" value="true_or_false" />

<input type="hidden" name="getGeolocationOption" value="GEOLOCATION_OPTION" />

<input type="hidden" name="hideTips" value="true_or_false" />

<button type="submit">Start VERIFY Process</button>

</form>

Again, ensure that you replace placeholders with actual values relevant to your project and the identity verification service. The searchOneToMany, getGeolocationOption, and hideTips fields are optional and should be included based on your specific requirements.

Redirecting Users

Implement the logic in your web application to redirect users to the prepared URL when they need to complete the ENROLL or VERIFY process. This can be a direct link, a button click event, or an automatic redirection based on application logic.

Handling the Callback

The callback parameter in the URL is crucial as it defines where the user is redirected after completing the verification process. Ensure your application is prepared to handle this callback URL:

- Capture query parameters or POST data returned to the callback URL.

- Process the verification results according to your application's logic (e.g., updating user status, displaying a success message).

Additional Tips

- Custom Parameters: Utilize the

Parametersfield in the ENROLL flow to pass any additional information specific to the transaction or user. This field must be in JSON format. - Risk Management: The

riskIdparameter allows you to specify the risk level of the transaction. Use this to adjust the verification process according to your security needs. - User Experience: Consider the user journey through the verification process. Provide clear instructions and support to ensure a smooth experience.

By following these steps, you can successfully integrate the Full Experience for identity verification into your web application, enhancing security and user trust in your platform.

KYC Ecuador Flow

Integration Guide for Identity Validation Flow for Ecuador

This guide offers a detailed approach to integrating a specialized identity validation flow tailored for Ecuadorian users. This process stands out by authenticating users through real-time validation of their facial features, comparing them against the official data provided by the Civilian Registry of Ecuador. By adhering to a proven framework used in classic verification flows, this integration is adapted to meet the unique requirements of users from Ecuador, ensuring a secure and efficient verification process.

Overview

The identity validation flow for Ecuador leverages advanced facial recognition technology to compare a user's live-captured photograph against identity data from the Civilian Registry of Ecuador. This comparison ensures that the person attempting to verify their identity matches the official records, thereby enhancing security and trust in digital platforms.

Key Steps for Integration

-

User Consent and Instruction: Begin by informing users about the process and obtaining their consent. Clearly explain the need for a facial photograph and how it will be used for verification purposes. Ensure users understand the importance of clear lighting and a neutral background for the photograph.

-

Capture and Submission: Implement a user-friendly interface that guides users through the photograph capture process. This interface should include real-time feedback to help users position their face correctly within the designated area. Once the photograph is captured, it, along with any necessary identification information (e.g., unique identification number), is submitted for verification.

-

Real-Time Verification: Upon submission, the system processes the photograph and identification information, comparing them against the data provided by the Civilian Registry of Ecuador. This step utilizes facial recognition algorithms to ensure a match between the live-captured photograph and the official records.

-

Verification Outcome: The result of the verification process is communicated back to the user and the platform in real-time. A successful verification confirms the user's identity matches the official records, while any discrepancies are flagged for further review.

Implementation Considerations

-

Privacy and Data Protection: Ensure the process complies with local and international data protection regulations. User data, especially biometric information, should be handled with the utmost care, ensuring privacy and security.

-

User Experience: Design the verification process to be as intuitive and straightforward as possible. Minimize user effort and provide clear instructions and feedback throughout the process.

-

Technical Integration: Depending on your platform's architecture, choose the appropriate method (GET or POST) for submitting the verification request. Ensure your system is capable of handling the response, whether it's a direct callback or a JSON object containing the verification outcome.

-

Testing and Quality Assurance: Before launching the integration, conduct thorough testing to ensure accuracy in the verification process and a smooth user experience. Consider various user scenarios and edge cases to refine the process.

By following this guide, you can integrate a robust and efficient identity validation flow into your platform, specifically designed for Ecuadorian users. This process not only enhances security by leveraging real-time data from the Civilian Registry of Ecuador but also offers a seamless and user-friendly experience, building trust and confidence among your user base.

Step 1: Preparing for Integration

Before initiating the integration process, ensure you have the following:

- Access to the base URL for the identity verification service.

- An API key and project name provided by the service provider.

- Understanding of the specific parameters required for the Ecuadorian identity validation flow.

Step 2: Constructing the Request

The identity validation process can be initiated using either GET or POST methods, depending on your application's architecture and preferences.

For the GET Method:

Construct a URL with the required parameters appended as query strings. The basic structure is as follows:

URL_Base/validar-rostro-persona?callback=URL_CALLBACK&key=API_KEY&projectName=PROJECT_NAME&product=PRODUCT&Parameters=PARAMETERS&riskId=RISK_ID

For the POST Method:

If you prefer using POST, your application will need to send a request to URL_Base/validar-rostro-persona/ with the parameters included in the body of the request.

Parameters:

callback: The URL to which the user will be redirected after the verification process is completed.key: The API key assigned to your project.projectName: The name of your project.product: The product number for the transaction.Parameters: Additional custom parameters in JSON format, associated with the transaction. This is optional.riskId: The transaction's risk level identifier. If not specified, a default level is assumed.

Step 3: Handling the User Experience

-

User Consent: Inform the user about the minimum conditions required for capturing the facial photograph with Liveness detection. The browser will request permission to access the device's camera and location.

-

Capture Process: After granting permission, the user will be prompted to capture their photograph by clicking on "capturar fotografía". They must keep their face within the on-screen oval until the internal clock completes.

-

Data Entry: On the Identification Data screen, users must enter their unique identification number and individual fingerprint code to proceed with the identity validation by pressing "Continuar".

-

Completion: Upon completion, users will see a summary screen indicating that the transaction has finished successfully.

Step 4: Receiving the Response

After the user completes the process, your application will receive a JSON object at the specified callback URL. The JSON structure includes the transaction's outcome and relevant data, such as the id, codeId, and ThresHoldCompareFaces.

Step 5: Retrieving Transaction Results

The Validation method is a crucial part of the identity verification process, allowing you to retrieve detailed information about the transaction and the outcome of the validation. This method is particularly useful for post-verification steps, such as auditing, compliance checks, or further user verification processes. Below, we detail how to use the Validation method with a curl command, which is designed to fetch the results of a specific transaction using a GET request.

Overview

To retrieve the results of an identity verification transaction, you will need the codeId that was provided in the callback after the verification process. This codeId serves as a unique identifier for the transaction, enabling you to query the verification results.

CURL Command Structure

The curl command to retrieve the transaction results is structured as follows:

curl -X GET "{URL_Base}/api/{ProjectName}/Validation/{id}?returnImages=false" \

-H "accept: application/json" \

-H "apiKey: your_api_key" \

-H "returnDocuments: true" \

-H "returnVideoLiveness: false"

Parameters Explained

-

{URL_Base}: The base URL of the identity verification service. This should be replaced with the actual URL provided to you.

-

{ProjectName}: The name of your project as registered with the identity verification service. Replace

{ProjectName}with your specific project name. -

{id}: The unique identifier (

codeId) for the transaction you wish to retrieve. This ID is typically provided in the callback after the verification process. -

returnImages (Query Parameter): Specifies whether to include images in the response. Setting this to

falseexcludes images from the response, whiletrueincludes them.

Headers

-

accept: Indicates the expected media type of the response, which is

application/jsonfor JSON-formatted data. -

apiKey: Your API key for authentication with the identity verification service. Replace

your_api_keywith the actual API key assigned to your project. -

returnDocuments: A header that determines whether document data should be included in the response. Setting this to

trueincludes document data, whilefalseexcludes it. -

returnVideoLiveness: Indicates whether the response should contain video data from the liveness verification process.

trueincludes video data, andfalseexcludes it.

Usage Tips

-

Ensure all placeholders in the

curlcommand are replaced with actual values specific to your project and the transaction you're querying. -

Execute the

curlcommand in a terminal or command-line interface. The server's response will include the transaction details and validation results, according to the parameters you've set. -

Carefully process the JSON response to extract and utilize the verification information as needed in your application or for compliance purposes.

By following these guidelines and using the corrected URL structure and parameters, you can effectively retrieve detailed information about identity verification transactions, enhancing your application's security and user management processes .

KYC Ecuador + Document Capture Flow

Integration Guide for Identity Validation Flow for Ecuador + Document Capture

This guide outlines the integration of a specialized identity validation flow designed for Ecuadorian users. This enhanced process is distinguished by its ability to authenticate users in real-time by capturing their facial features and an image of their identification document. Unlike traditional verification flows that may compare document information against official records, this streamlined approach focuses solely on capturing the document's image without validating its data. This adaptation ensures a secure and efficient verification process, tailored to meet the unique needs of users from Ecuador, while simplifying the steps involved in identity verification.

Overview

The identity validation flow for Ecuador leverages advanced facial recognition technology to compare a user's live-captured photograph against identity data from the Civilian Registry of Ecuador. This comparison ensures that the person attempting to verify their identity matches the official records, thereby enhancing security and trust in digital platforms.

Key Steps for Integration

-

User Consent and Instruction: Begin by informing users about the process and obtaining their consent. Clearly explain the need for a facial photograph and how it will be used for verification purposes. Ensure users understand the importance of clear lighting and a neutral background for the photograph.

-

Capture and Submission: Implement a user-friendly interface that guides users through the photograph capture process. This interface should include real-time feedback to help users position their face correctly within the designated area. Once the photograph is captured, it, along with any necessary identification information (e.g., unique identification number), is submitted for verification.

-

Real-Time Verification: Upon submission, the system processes the photograph and identification information, comparing them against the data provided by the Civilian Registry of Ecuador. This step utilizes facial recognition algorithms to ensure a match between the live-captured photograph and the official records.

-

Verification Outcome: The result of the verification process is communicated back to the user and the platform in real-time. A successful verification confirms the user's identity matches the official records, while any discrepancies are flagged for further review.

Implementation Considerations

-

Privacy and Data Protection: Ensure the process complies with local and international data protection regulations. User data, especially biometric information, should be handled with the utmost care, ensuring privacy and security.

-

User Experience: Design the verification process to be as intuitive and straightforward as possible. Minimize user effort and provide clear instructions and feedback throughout the process.

-

Technical Integration: Depending on your platform's architecture, choose the appropriate method (GET or POST) for submitting the verification request. Ensure your system is capable of handling the response, whether it's a direct callback or a JSON object containing the verification outcome.

-

Testing and Quality Assurance: Before launching the integration, conduct thorough testing to ensure accuracy in the verification process and a smooth user experience. Consider various user scenarios and edge cases to refine the process.

By following this guide, you can integrate a robust and efficient identity validation flow into your platform, specifically designed for Ecuadorian users. This process not only enhances security by leveraging real-time data from the Civilian Registry of Ecuador but also offers a seamless and user-friendly experience, building trust and confidence among your user base.

Step 1: Preparing for Integration

Before initiating the integration process, ensure you have the following:

- Access to the base URL for the identity verification service.

- An API key and project name provided by the service provider.

- Understanding of the specific parameters required for the Ecuadorian identity validation flow.

Step 2: Constructing the Request

The identity validation process can be initiated using either GET or POST methods, depending on your application's architecture and preferences.

For the GET Method:

Construct a URL with the required parameters appended as query strings. The basic structure is as follows:

URL_Base/validar-rostro-documento-persona?callback=URL_CALLBACK&key=API_KEY&projectName=PROJECT_NAME&product=PRODUCT&Parameters=PARAMETERS&riskId=RISK_ID

For the POST Method:

If you prefer using POST, your application will need to send a request to URL_Base/validar-rostro-persona/ with the parameters included in the body of the request.

Parameters:

callback: The URL to which the user will be redirected after the verification process is completed.key: The API key assigned to your project.projectName: The name of your project.product: The product number for the transaction.Parameters: Additional custom parameters in JSON format, associated with the transaction. This is optional.riskId: The transaction's risk level identifier. If not specified, a default level is assumed.

Step 3: Handling the User Experience

-

User Consent: Inform the user about the minimum conditions required for capturing the facial photograph with Liveness detection. The browser will request permission to access the device's camera and location.

-

Capture Process: After granting permission, the user will be prompted to capture their photograph by clicking on "capturar fotografía". They must keep their face within the on-screen oval until the internal clock completes.

-

Data Entry: On the Identification Data screen, users must enter their unique identification number and individual fingerprint code to proceed with the identity validation by pressing "Continuar".

-

Completion: Upon completion, users will see a summary screen indicating that the transaction has finished successfully.

Step 4: Receiving the Response

After the user completes the process, your application will receive a JSON object at the specified callback URL. The JSON structure includes the transaction's outcome and relevant data, such as the id, codeId, and ThresHoldCompareFaces.

Step 5: Retrieving Transaction Results

The Validation method is a crucial part of the identity verification process, allowing you to retrieve detailed information about the transaction and the outcome of the validation. This method is particularly useful for post-verification steps, such as auditing, compliance checks, or further user verification processes. Below, we detail how to use the Validation method with a curl command, which is designed to fetch the results of a specific transaction using a GET request.

Overview

To retrieve the results of an identity verification transaction, you will need the codeId that was provided in the callback after the verification process. This codeId serves as a unique identifier for the transaction, enabling you to query the verification results.

CURL Command Structure

The curl command to retrieve the transaction results is structured as follows:

curl -X GET "{URL_Base}/api/{ProjectName}/Validation/{id}?returnImages=false" \

-H "accept: application/json" \